Back to Vegas, and This Time, We Brought Home the MVH Award !

In 2024 we released the blog post We Hacked Google A.I. for $50,000, which recounts how Joseph "rez0" Thacker, Justin "Rhynorater" Gardner, and I, Roni "Lupin" Carta, traveled to Las Vegas in 2023 for a hacking journey that spanned from Las Vegas to Tokyo to France, all in pursuit of Gemini vulnerabilities during Google's LLM bugSWAT event. Well, we did it again …

The world of Generative Artificial Intelligence (GenAI) and Large Language Models (LLMs) continues to be the Wild West of tech. Since GPT burst onto the scene, the race to dominate the LLM landscape has only intensified, with tech giants like Meta, Microsoft, and Google racing to have the best model possible. But now Anthropic, Mistral, DeepSeek, and others are also entering the scene and impacting the industry at scale.

As companies rush to deploy AI assistants, classifiers, and a myriad of other LLM-powered tools, a critical question remains: are we building securely ? As we highlighted last year, the rapid adoption sometimes feels like we forgot the fundamental security principles, opening the door to novel and familiar vulnerabilities alike.

AI agents are rapidly emerging as the next game-changer in the world of artificial intelligence. These intelligent entities leverage advanced chain-of-thought reasoning, a process where the model generates a coherent sequence of internal reasoning steps to solve complex tasks. By documenting their thought processes, these agents not only enhance their decision-making capabilities but also provide transparency, allowing developers and researchers to understand and refine their performance. This dynamic combination of autonomous action and visible reasoning is paving the way for AI systems that are more adaptive, interpretable, and reliable. As we witness an increasing number of applications, from interactive assistants to sophisticated decision-support systems, the integration of chain-of-thought reasoning in AI agents is setting a new standard for what these models can achieve in real-world scenarios.

Google, to their credit, are actively recognising this emerging frontier of AI security, and they started early on. Their "LLM bugSWAT" events, held in vibrant locales like Las Vegas, are a testament to their commitment to proactive security red teaming. These events challenge researchers worldwide to rigorously test their AI systems, seeking out the vulnerabilities that might otherwise slip through the cracks.

And guess what ? We answered the call again in 2024 ! Justin and I returned to the bugSWAT event in Las Vegas, and this time, our efforts paid off in a big way. Thanks to a brand new vulnerability in Gemini, the one we’re about to detail, we were incredibly honored to be awarded the Most Valuable Hacker (MVH) title at this year's Las Vegas bugSWAT !

Picture taken with our MVH award and 2 awesome Googlers <3

So, prepare to dive deep once more. This isn't just a repeat performance; it's a whole new vulnerability that we are about to show you ;)

Discovering the new Gemini

The Google team granted us early access to a preview of the next Gemini update, one that had several exciting new features. Along with this exclusive access, we received detailed documentation explaining these features and their intended functionalities. The goal was to fully explore and test these capabilities from an attacker’s perspective.

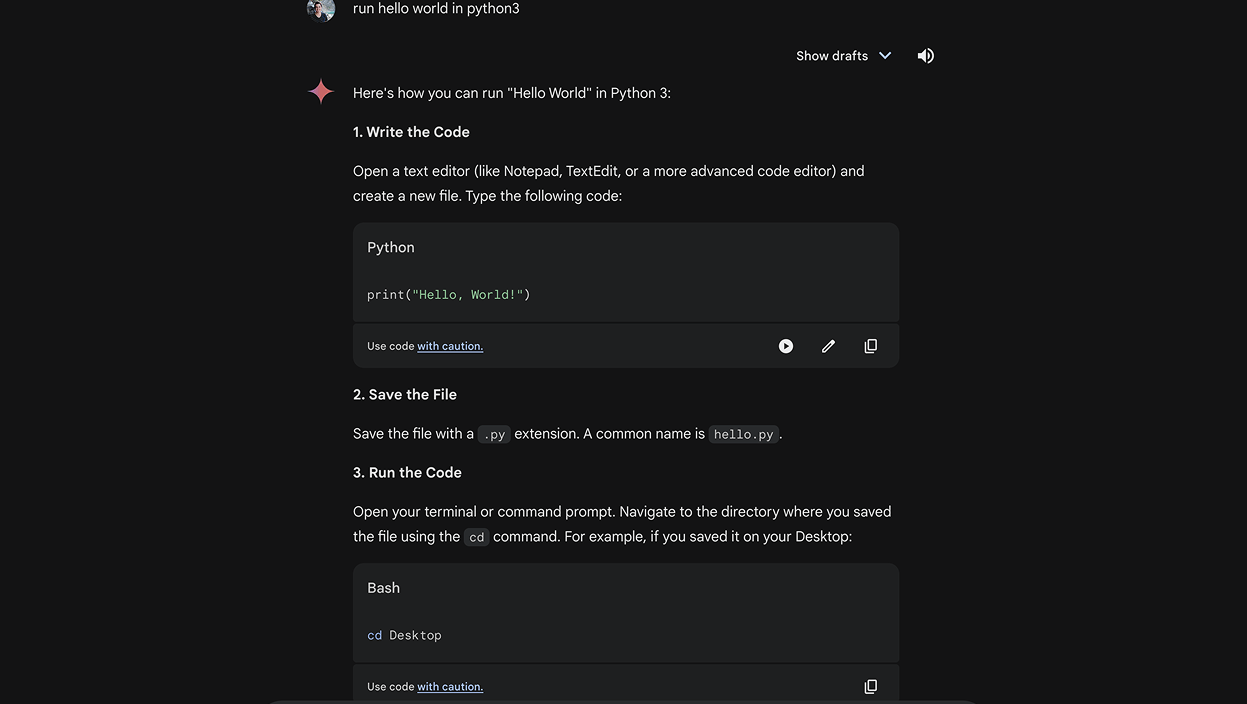

It all started with a simple prompt. We asked Gemini:

run hello world in python3

Gemini provided the code, and the interface offered the enticing "Run in Sandbox" button. Intrigued, we started exploring.

Gemini's Python Playground – A Secure Space... or Was It ?

Gemini at the time offered a Python Sandbox Interpreter. Think of it as a safe space where you can run Python code generated by the AI itself, or even your own custom scripts, right within the Gemini environment. This sandbox, powered by Google's gVisor in a GRTE (Google Runtime Environment), is designed to be secure. The idea is that you can experiment with code without risking any harm to the underlying system, a crucial feature for testing and development.

gVisor is a user-space kernel developed by Google that acts as an intermediary between containerized applications and the host operating system. By intercepting system calls made by applications, it enforces strict security boundaries that reduce the risk of container escapes and limit potential damage from compromised processes. Rather than relying solely on traditional OS-level isolation, gVisor implements a minimal, tailored subset of kernel functionalities, thereby reducing the attack surface while still maintaining reasonable performance. This innovative approach enhances the security of container environments, making gVisor an essential tool for safely running and managing containerized workloads.

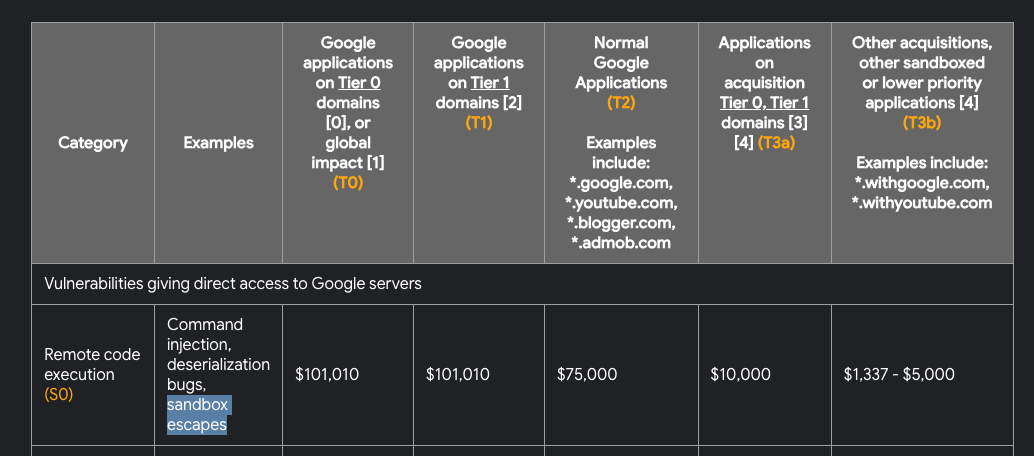

As security researchers and bug bounty hunters, we know that this gVisor sandbox is secured with multiple layers of defense, and from what we’ve seen no one has managed to escape it. In fact, a sandbox escape could earn you a $100k bounty:

While it might be possible to still escape it, this is a whole different set of challenges than what we were looking for.

However, a sandbox does not always need to be escaped: in many cases, files inside the sandbox itself can help us leak data. The idea, shared with us by a Googler from the security team, was to get shell-like access inside the sandbox and look for any piece of data that wasn't supposed to be accessible. The main problem was that this sandbox can only run a custom-compiled Python binary.

Mapping the Territory

The first thing we noticed was that the Front End also let us entirely rewrite the Python code and run our own arbitrary version in the sandbox. Our first step was to understand the structure of this sandbox. We suspected there might be interesting files lurking around. Since we couldn’t pop a shell, we checked which libraries were available in this custom-compiled Python binary. We found out that os was present ! Great, we could then use it to map the filesystem.
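
As a rough illustration of that library check, here is a minimal sketch that probes for modules without importing them. The candidate list and the helper name probe_modules are our own assumptions for illustration, not the exact script we ran during the event.

```python
import importlib.util

# Candidate modules worth probing; this list is purely illustrative.
CANDIDATES = ["os", "subprocess", "socket", "ctypes", "base64", "not_a_real_module_xyz"]


def probe_modules(names):
    """Return {module_name: bool} telling whether each module can be located."""
    available = {}
    for name in names:
        try:
            available[name] = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            # Some interpreters raise instead of returning None.
            available[name] = False
    return available


for name, ok in probe_modules(CANDIDATES).items():
    print(f"{name}: {'available' if ok else 'missing'}")
```

Using find_spec rather than a plain import avoids executing module code just to learn whether the module exists.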

We wrote the following Python Code:

    import os

    def get_size_formatted(size_in_bytes):
        if size_in_bytes >= 1024 ** 3:
            size = size_in_bytes / (1024 ** 3)
            unit = "Go"
        elif size_in_bytes >= 1024 ** 2:
            size = size_in_bytes / (1024 ** 2)
            unit = "Mb"
        else:
            size = size_in_bytes / 1024
            unit = "Ko"
        return f"{size:.2f} {unit}"

    def lslR(path):
        try:
            # Determine if the path is a directory or a file
            if os.path.isdir(path):
                type_flag = 'd'
                total_size = sum(os.path.getsize(os.path.join(path, f)) for f in os.listdir(path))
            else:
                type_flag = 'f'
                total_size = os.path.getsize(path)

            size_formatted = get_size_formatted(total_size)

            # Check read and write permissions
            read_flag = 'r' if os.access(path, os.R_OK) else '-'
            write_flag = 'w' if os.access(path, os.W_OK) else '-'

            # Print the type, permissions, size, and path
            print(f"{type_flag}{read_flag}{write_flag} - {size_formatted} - {path}")

            # If it's a directory, recursively print the contents
            if type_flag == 'd':
                for entry in os.listdir(path):
                    entry_path = os.path.join(path, entry)
                    lslR(entry_path)
        except PermissionError:
            print(f"d-- - 0Ko - {path} (PermissionError: cannot access)")
        except Exception as e:
            print(f"--- - 0Ko - {path} (Error: {e})")

The goal of this code was to get a recursive listing of files and directories, showing which files are present along with their size and permissions.

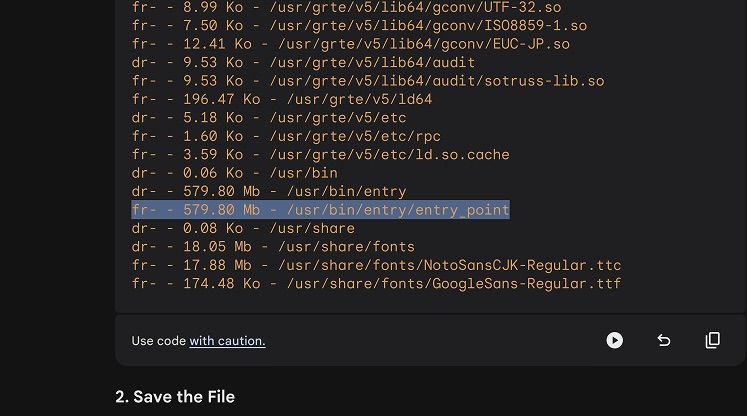

We then called lslR("/usr") to list the /usr directory.

This call identified a binary file located at /usr/bin/entry/entry_point. This sounds juicy !
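
With a listing this large, filtering by size is a quick way to make files like this one stand out. The following is a minimal sketch of that idea using os.walk; the helper name find_large_files and the 100 MiB threshold are assumptions for illustration, not what we ran at the time.

```python
import os


def find_large_files(root, min_bytes):
    """Yield (size, path) for regular files under root that are at least min_bytes."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.path.islink(path):
                    continue  # skip symlinks so targets are not counted twice
                size = os.path.getsize(path)
            except OSError:
                continue  # unreadable or vanished entry
            if size >= min_bytes:
                yield size, path


# Example (commented out): files of 100 MiB or more under /usr, largest first.
# for size, path in sorted(find_large_files("/usr", 100 * 1024**2), reverse=True):
#     print(f"{size / 1024**2:.1f} MiB  {path}")
```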

Leak the entry_point file

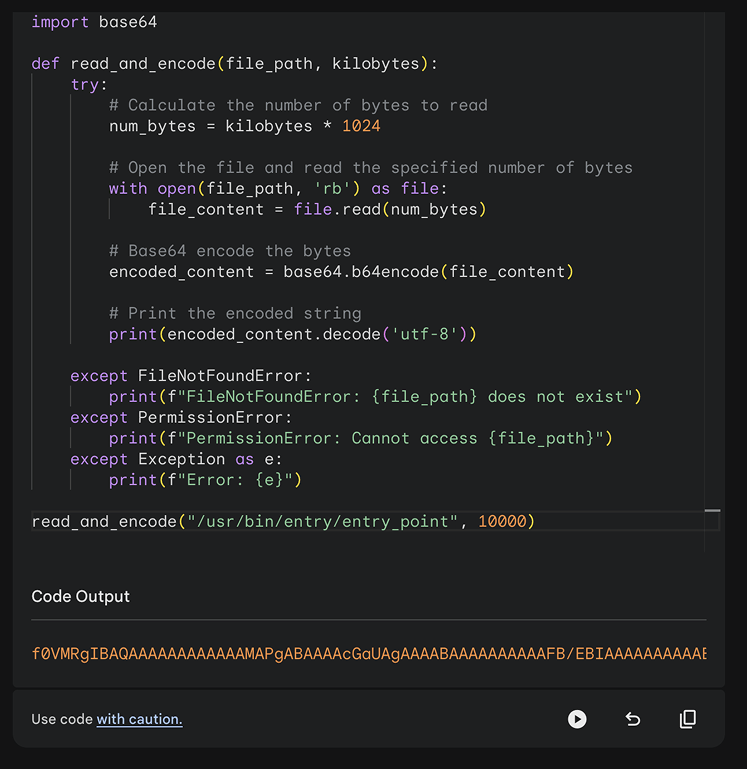

Our next move was to extract this file, but at 579 MB, base64-encoding it and printing it in the Front End in one go wasn't an option: it caused the entire sandbox to hang until it eventually timed out.

We checked whether we could make TCP, HTTP, and DNS calls to exfiltrate information. Intriguingly, all our outbound connection attempts failed: the sandbox appeared completely isolated from the external network. This led to an interesting puzzle: if the sandbox is so tightly isolated that it cannot make external calls, how does it interface with Google services like Google Flights and others ? Well … we might be able to answer this later ;D
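
For readers who want to run a similar check, here is a minimal sketch of an outbound connectivity probe using only the standard socket module. The helper names and the timeout value are assumptions for illustration; our actual probes were ad hoc.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False


def can_resolve(hostname):
    """Return True if the hostname resolves through the system resolver."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except OSError:
        return False


# Example (commented out): in an isolated sandbox both calls would return False.
# print(can_resolve("example.com"), can_connect("example.com", 443))
```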

So we needed to exfiltrate this binary by printing it to the console in chunks. For that we used the seek() function to walk through the binary file and retrieve the entire binary in chunks of 10 MB. The script below shows the read-and-encode step for the first chunk.

    import os
    import base64

    def read_and_encode(file_path, kilobytes):
        try:
            # Calculate the number of bytes to read
            num_bytes = kilobytes * 1024

            # Open the file and read the specified number of bytes
            with open(file_path, 'rb') as file:
                file_content = file.read(num_bytes)

            # Base64 encode the bytes
            encoded_content = base64.b64encode(file_content)

            # Print the encoded string
            print(encoded_content.decode('utf-8'))
        except FileNotFoundError:
            print(f"FileNotFoundError: {file_path} does not exist")
        except PermissionError:
            print(f"PermissionError: Cannot access {file_path}")
        except Exception as e:
            print(f"Error: {e}")

    read_and_encode("/usr/bin/entry/entry_point", 10000)
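
The script above always reads from the start of the file. To fetch later chunks, the read has to begin at an offset, which is where seek() comes in. Here is a minimal sketch of that variant; the function name read_chunk_b64, the chunk_index parameter, and the exact chunk size are assumptions for illustration.

```python
import base64


def read_chunk_b64(file_path, offset, chunk_size):
    """Return base64 text for chunk_size bytes starting at offset ('' past EOF)."""
    with open(file_path, "rb") as f:
        f.seek(offset)  # jump straight to the start of the wanted chunk
        data = f.read(chunk_size)
    return base64.b64encode(data).decode("ascii")


CHUNK_SIZE = 10 * 1024 * 1024  # roughly 10 MB per sandbox run (assumed value)

# One sandbox run per chunk; only chunk_index changes between requests.
# chunk_index = 0
# print(read_chunk_b64("/usr/bin/entry/entry_point", chunk_index * CHUNK_SIZE, CHUNK_SIZE))
```

Because only one number changes from one request to the next, this shape lends itself well to a bulk request tool.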

We then used Caido to catch, in our proxy, the request that runs the sandbox call and fetches the result, and sent it to the Automate feature. Automate lets you send requests in bulk, which provides a flexible way to run brute-force or fuzzing campaigns that rapidly modify certain request parameters using wordlists.

Note from Lupin: In the article it seems like a straightforward path, but it actually took us several hours to get to that point. It was 3 am, Justin and I were hacking together, and I was sleeping on my keyboard while Justin was exfiltrating the binary using Caido.

Once we had all the base64 chunks, we reconstructed the entire file locally and we were ready to see its content.
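
The reconstruction step is simple enough to sketch. This is a minimal version assuming the chunks are already collected in order as base64 strings; the helper names are ours, and the hash is only there so that two reconstructions can be compared.

```python
import base64
import hashlib


def reassemble(chunks_b64):
    """Decode base64 chunks in order and return the joined bytes."""
    return b"".join(base64.b64decode(chunk) for chunk in chunks_b64)


def sha256_hex(data):
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Example (commented out): write the rebuilt binary to disk and print its hash.
# binary = reassemble(chunks)  # chunks: list of base64 strings, in order
# with open("entry_point", "wb") as out:
#     out.write(binary)
# print(len(binary), sha256_hex(binary))
```

Checking that the final length matches the size reported inside the sandbox is a cheap way to catch a missing or duplicated chunk.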

How to read this file ?

file command ?

Running the file command on the binary confirmed that it is an ELF binary: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, interpreter /usr/grte/v5/lib64/ld-linux-x86-64.so.2. Mmmmmh what can we do with this ?
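
Most of what file reports here comes from the first few bytes of the ELF header. As a small illustration (not a replacement for the file command), this sketch decodes the class, byte order, object type, and machine fields; the function name describe_elf is our own.

```python
import struct


def describe_elf(header):
    """Summarize the first 20 bytes of an ELF file, or return None if not ELF."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        return None
    ei_class, ei_data = header[4], header[5]
    endian = "<" if ei_data == 1 else ">"
    # e_type and e_machine are two 16-bit fields right after the 16-byte e_ident.
    e_type, e_machine = struct.unpack_from(endian + "HH", header, 16)
    return {
        "bits": {1: 32, 2: 64}.get(ei_class),
        "byte_order": {1: "LSB", 2: "MSB"}.get(ei_data),
        "type": {1: "relocatable", 2: "executable", 3: "shared object", 4: "core"}.get(e_type, e_type),
        "machine": {3: "x86", 62: "x86-64", 183: "aarch64"}.get(e_machine, e_machine),
    }


# Example (commented out):
# with open("entry_point", "rb") as f:
#     print(describe_elf(f.read(20)))
```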

strings command ?

When we executed the strings command, the output was particularly intriguing due to multiple references to google3, Google’s internal repository. This pointed to the presence of internal data paths and code snippets that were never meant for external exposure, clearly indicating that the binary contains traces of Google’s proprietary software. But is there actually any security implication ?
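
If the strings utility is not at hand, its core behavior is easy to approximate. The sketch below extracts runs of printable ASCII from raw bytes, roughly like strings does with its default minimum length of 4; it is an approximation for illustration, and the function name is our own.

```python
import re


def extract_strings(data, min_len=4):
    """Yield printable-ASCII runs of at least min_len bytes found in data."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    for match in pattern.finditer(data):
        yield match.group().decode("ascii")


# Example (commented out): count the strings that mention google3.
# with open("entry_point", "rb") as f:
#     hits = [s for s in extract_strings(f.read()) if "google3" in s]
# print(len(hits))
```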

Binwalk FTW !

The real breakthrough came when using Binwalk. This tool managed to extract an entire file structure from within the binary, revealing a comprehensive sandbox layout. The extraction uncovered multiple directories and files, painting a detailed picture of the internal architecture and exposing components that made us react with ... OMG.
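
To give an intuition for how this kind of extraction works, here is a toy sketch of signature scanning: walk the bytes and report every offset where a known magic number appears. The signature table is a tiny illustrative sample chosen by us; we are not claiming these are the formats Binwalk matched in this binary, and real tools validate each hit far more carefully.

```python
# A tiny, illustrative signature table (assumption: not Binwalk's actual list).
SIGNATURES = {
    b"PK\x03\x04": "zip local file header",
    b"\x1f\x8b\x08": "gzip stream",
    b"\x7fELF": "ELF object",
}


def scan_signatures(data):
    """Return sorted (offset, description) pairs for every known magic in data."""
    hits = []
    for magic, label in SIGNATURES.items():
        start = data.find(magic)
        while start != -1:
            hits.append((start, label))
            start = data.find(magic, start + 1)
    return sorted(hits)


# Example (commented out):
# with open("entry_point", "rb") as f:
#     for offset, label in scan_signatures(f.read()):
#         print(f"{offset:#x}  {label}")
```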

Wait … is that internal Source Code ?

When digging into the extract generated by our binwalk analysis, we unexpectedly found internal source code. The extraction revealed entire directories of proprietary Google source code. But is it sensitive ?

Google3 Directory with Python Code

In the binwalk extracted directory we can find a google3 directory with the following files:

    total 2160
    drwxr-xr-x   14 lupin staff 448B Aug 7 06:17 .
    drwxr-xr-x  231 lupin staff 7.2K Aug 7 18:31 ..
    -r-xr-xr-x    1 lupin staff 1.1M Jan 1 1980 __init__.py
    drwxr-xr-x    5 lupin staff 160B Aug 7 06:17 _solib__third_Uparty_Scrosstool_Sv18_Sstable_Ccc-compiler-k8-llvm
    drwxr-xr-x    4 lupin staff 128B Aug 7 06:17 assistant
    drwxr-xr-x    4 lupin staff 128B Aug 7 06:17 base
    drwxr-xr-x    5 lupin staff 160B Aug 7 06:17 devtools
    drwxr-xr-x    4 lupin staff 128B Aug 7 06:17 file
    drwxr-xr-x    4 lupin staff 128B Aug 7 06:17 google
    drwxr-xr-x    4 lupin staff 128B Aug 7 06:17 net
    drwxr-xr-x    9 lupin staff 288B Aug 7 06:17 pyglib
    drwxr-xr-x    4 lupin staff 128B Aug 7 06:17 testing
    drwxr-xr-x    9 lupin staff 288B Aug 7 06:17 third_party
    drwxr-xr-x    4 lupin staff 128B Aug 7 06:17 util

In the assistant directory, internal Gemini code related to RPC calls (used for handling requests via tools like YouTube, Google Flights, Google Maps, etc.) was also discovered. The directory structure is as follows:

    .
    ├── __init__.py
    └── boq
        ├── __init__.py
        └── lamda
            ├── __init__.py
            └── execution_box
                ├── __init__.py
                ├── images
                │   ├── __init__.py
                │   ├── blaze_compatibility_hack.py
                │   ├── charts_json_writer.py
                │   ├── format_exception.py
                │   ├── library_overrides.py
                │   ├── matplotlib_post_processor.py
                │   ├── py_interpreter.py
                │   ├── py_interpreter_main.py
                │   └── vegalite_post_processor.py
                ├── sandbox_interface
                │   ├── __init__.py
                │   ├── async_sandbox_rpc.py
                │   ├── sandbox_rpc.py
                │   ├── sandbox_rpc_pb2.pyc
                │   └── tool_use
                │       ├── __init__.py
                │       ├── metaprogramming.py
                │       └── runtime.py
                └── tool_use
                    ├── __init__.py
                    └── planning_immersive_lib.py

    8 directories, 22 files

A Closer Look at the Python Code

Inside the file google3/assistant/boq/lamda/execution_box/images/py_interpreter.py, a snippet of code reveals:

    # String for attempted script dump detection:
    snippet = (  # pylint: disable=unused-variable
        "3AVp#dzcQj$U?uLOj+Gl]GlY<+Z8DnKh"  # pylint: disable=unused-variable
    )

This snippet appears to serve as a safeguard against unauthorized script dumping, underscoring that the code was never intended for public exposure.

After a thorough review, the inclusion of what appeared to be internal Google3 code was, in fact, a deliberate choice… Too bad x)

The Python code, despite its anti-dumping mechanism that might initially indicate restricted access, had been explicitly approved for public exposure by the Google Security Team well before launch. Although these measures were originally designed to prevent unintended printing, they were retained because … why not ?

But we didn’t leave this sandbox alone, we knew we were close to something huge ! ;D

Digging into the main logic of the Sandbox

While digging deeper into the Python code, we noticed that, as expected, this sandbox was communicating with external Google servers to perform activities such as fetching data from Google Flights or other Google services.

This was implemented via a Python module (google3.assistant.boq.lamda.execution_box.sandbox_interface) which exposed various functions, such as _set_reader_and_writer, that could be called.

    def _set_reader_and_writer(
        reader_handle: io.BufferedReader | None,
        writer_handle: io.BufferedWriter | None,
    ) -> None:
        """Sets the reader and writer handles for rpcs.

        Should be called before running any user code that might
        import async_sandbox_rpc

        Args:
          reader_handle: the handle through which to receive incoming RpcResponses. If
            None will default to legacy behavior (/dev/fd/3)
          writer_handle: the handle through which to receive incoming RpcRequests. If.
            None will default to legacy behavior (/dev/fd/4)
        """
        with _INIT_LOCK:
            global _READER_HANDLE
            global _WRITER_HANDLE
            _READER_HANDLE, _WRITER_HANDLE = reader_handle, writer_handle

    def run_tool(
        name: str, operation_id: str, parameters: str
    ) -> sandbox_rpc_pb2.RunToolResponse:
        """Runs a tool with the given name and id, passing in parameters.

        Args:
          name: The name of the tool.
          operation_id: The name of the operation to perform.
          parameters: The parameters to pass to the tool.

        Returns:
          A RunToolResponse containing the response from the tool.
        """
        result = make_rpc(
            sandbox_rpc_pb2.RpcRequest(
                run_tool_request=sandbox_rpc_pb2.RunToolRequest(
                    name=name, operation_id=operation_id, parameters=parameters
                )
            )
        )
        if result and result.HasField("run_tool_response"):
            return result.run_tool_response
        else:
            return sandbox_rpc_pb2.RunToolResponse(response="")

We would provide various pieces of data to these functions, which would serialize the data into a protobuf-compatible format and then call out over RPC by writing to local file descriptor 5. The response could then be read from local file descriptor 7. By utilizing the protos that were found in the massive binary, we were able to craft messages to and from this RPC server, and call these Google tools directly.
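
To make the mechanism more concrete, here is a toy sketch of request/response messaging over raw file descriptors. Everything about the wire format is an assumption: we use a 4-byte big-endian length prefix and opaque bytes as the payload, whereas the real sandbox exchanges serialized protobuf messages with framing we do not reproduce here. The sketch uses an os.pipe() pair instead of the sandbox's descriptors so that it runs anywhere.

```python
import os
import struct


def send_message(fd, payload):
    """Write one length-prefixed message to a file descriptor (assumed framing)."""
    data = struct.pack(">I", len(payload)) + payload
    while data:
        written = os.write(fd, data)
        data = data[written:]


def _read_exact(fd, n):
    """Read exactly n bytes from fd, or raise EOFError if it closes early."""
    buf = b""
    while len(buf) < n:
        chunk = os.read(fd, n - len(buf))
        if not chunk:
            raise EOFError("descriptor closed mid-message")
        buf += chunk
    return buf


def recv_message(fd):
    """Read one length-prefixed message from a file descriptor."""
    (length,) = struct.unpack(">I", _read_exact(fd, 4))
    return _read_exact(fd, length)


# Demonstration over a local pipe standing in for the sandbox descriptors.
read_fd, write_fd = os.pipe()
send_message(write_fd, b"serialized request bytes")
print(recv_message(read_fd))
os.close(read_fd)
os.close(write_fd)
```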

However, we noticed something interesting: not every sandbox had the same set of Google services available. It depended on whether the sandbox was spawned by the Front End to run Python source code, or by the Google Agent. What do we mean by that ?

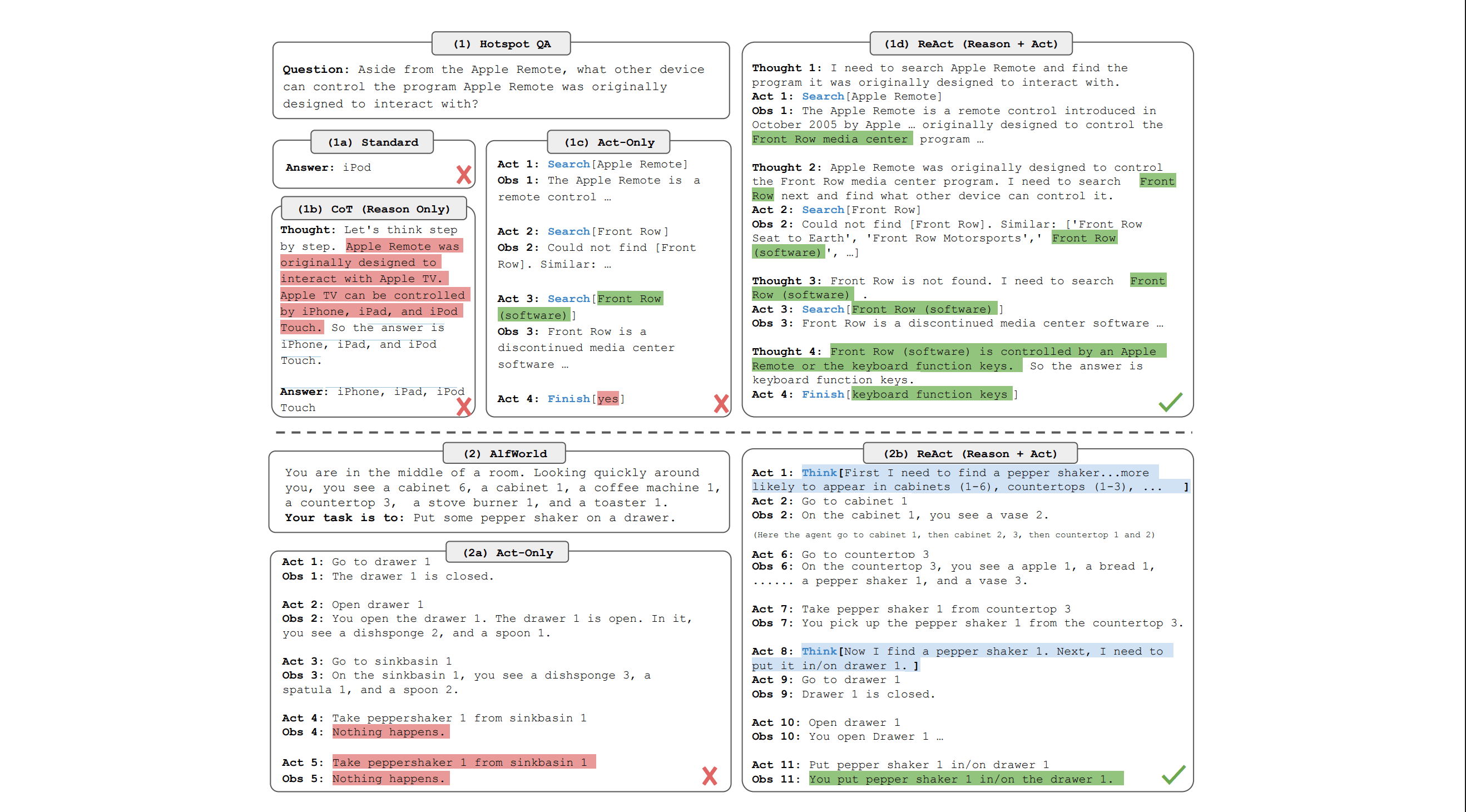

ReAct Research paper !

Before explaining the next part, we need to mention that Google’s team showed us the following research paper, which Gemini is based on:

This paper introduces a novel approach (at the time) where language models alternate between generating reasoning traces and executing specific actions, effectively merging thought and behavior in an interleaved manner. In practice, this means that as the model reasons through a problem, creating a transparent trail of thought that helps it plan, track, and adjust its actions, it simultaneously interacts with external sources to gather additional data when needed. This dynamic interplay not only boosts the model’s performance by mitigating common issues like hallucination and error propagation but also makes its decision-making process more interpretable and controllable for human operators.

By integrating both internal reasoning and external knowledge, ReAct offers a flexible and general framework that excels across a variety of tasks, ranging from question answering and fact verification to text-based games and web navigation. In essence, ReAct leverages the combined strengths of reasoning and acting to create more robust, human-aligned, and versatile language models.

Ok, but why do we need to understand this ? Well, if we follow the logic of this paper, Gemini can basically prompt itself several times in order to refine the commands and build a proper chain of thought.

If a user asks “What is the earliest flight between Toronto and New York ?”, Gemini would have roughly a chain of thought like:

- The user is asking me to search for the earliest flight between Toronto and New York
- Creating a plan like:
  - I need to run Python code that will connect to the Google Flights tool
  - The code needs to find all flights from today to next week from YYZ airport to JFK airport
  - The code needs to sort the flights by date and take the first item
- Once the plan is done, it would generate the code with the available Tools that the Agent has
- Once the code is generated, it would spawn a sandbox with the right privileges (e.g. having access to the Google Flights tool through the file descriptor)
- Run the code, process the output
- Make a response to the user
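
The steps above can be sketched as a toy thought, action, observation loop. This is only an illustration of the pattern: the tool is a stub returning made-up departure times, the single scripted iteration stands in for what a model would generate, and none of the names reflect Gemini's actual implementation.

```python
def fake_flights_tool(origin, destination):
    """Stand-in for a real tool; returns hard-coded, made-up departure times."""
    return ["2030-01-02T09:30", "2030-01-01T07:15", "2030-01-01T18:40"]


# Tools the (hypothetical) agent is allowed to call from its sandbox.
TOOLS = {"flights": fake_flights_tool}


def react_episode(question):
    """Run one scripted thought -> action -> observation -> answer cycle."""
    trace = [("thought", f"The user asks: {question!r}. I need the flights tool.")]
    # Action: a real agent would emit this call as generated code.
    action = ("flights", ("YYZ", "JFK"))
    trace.append(("action", action))
    # Observation: the tool result is fed back into the reasoning.
    observation = TOOLS[action[0]](*action[1])
    trace.append(("observation", observation))
    # ISO 8601 timestamps sort chronologically, so min() is the earliest flight.
    trace.append(("answer", min(observation)))
    return trace


for step, content in react_episode("What is the earliest flight between Toronto and New York ?"):
    print(f"{step}: {content}")
```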

So our theory was the following:

- What if we could do a secondary prompt injection in the planning section of Gemini in order to have access to a more privileged sandbox ?

With the help of the Google Security Team, we tested this idea and observed that, depending on factors like the generation seed and temperature (all the usual probabilistic LLM nuances), we could occasionally access what appeared to be a more privileged sandbox.

By “more privileged sandbox,” we mean one that can access the extensions through two new file descriptors. These file descriptors are always present but aren’t always actively listening. When the agent calls the sandbox, they monitor for any calls to the extensions (Google services) so that we can interact with the API, whereas if the sandbox is accessed through the Python interpreter, those extensions remain inaccessible.
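
A quick way to see which descriptors a process has inherited is to call os.fstat on each candidate number. The sketch below does that and labels what it finds; it only tells you that a descriptor is open and what kind of object sits behind it, not whether anything is listening on the other end. The helper name and the upper bound of 64 are assumptions for illustration.

```python
import os
import stat


def open_descriptors(max_fd=64):
    """Return {fd: kind} for descriptors in [0, max_fd) that are currently open."""
    found = {}
    for fd in range(max_fd):
        try:
            mode = os.fstat(fd).st_mode
        except OSError:
            continue  # EBADF: nothing is open at this number
        if stat.S_ISFIFO(mode):
            kind = "pipe"
        elif stat.S_ISSOCK(mode):
            kind = "socket"
        elif stat.S_ISCHR(mode):
            kind = "char device"
        elif stat.S_ISREG(mode):
            kind = "regular file"
        else:
            kind = "other"
        found[fd] = kind
    return found


for fd, kind in open_descriptors().items():
    print(fd, kind)
```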

This led us to believe that there was a real opportunity for a P0 vulnerability: there was a specific message handler that might allow a file read on Google’s internal infrastructure, and we were hopeful that the sandbox with the tool extension could initiate an RPC call to this specific tool. Given the probabilistic nature of the attack, which made it difficult to reproduce consistently, we had the Google Security Team assess the situation. Ultimately, their review revealed that the suspicious message handler was not available via RPC and could only be called externally.

Even though our tests were limited, the core idea still has some real potential if we push it further. Running code in the sandbox context isn’t meant to give extra powers: it’s treated as untrusted, with safety checks outside the sandbox and every tool call being filtered. But being able to run code does offer some neat benefits:

- Reliability: Once you can run code, you can trigger actions more consistently.
- Chaining/Complexity: Controlling multiple tools or fine-tuning parameters via plain text is tough; code execution could let you build more complex chains, even if safety measures are still in place.
- Tool Output Poisoning: You might be able to manipulate a tool’s output more effectively.
- Leaks: There could be other hidden parts of the environment that, if exposed, might offer extra advantages.

This shows that our idea still holds promise for further escalation. As for that “leaks” potential, we wanted to see if we could at least confirm this one theory …

We found our leak ;D

While digging deeper, we uncovered several ways to leak proto files. In case you're not familiar, proto files (short for Protocol Buffer files) are like the blueprints of data, defining how messages are structured and how information is exchanged between different parts of the system. At first glance, they might seem harmless, but leaking these files can give a pretty detailed peek into Google’s internal architecture.

Exposing classification.proto

It turns out that by running a command like:

strings entry_point > stringsoutput.txt

and then searching for “Dogfood” in the resulting file, we managed to retrieve snippets of the internal protos. Parts of the extracted content included the metadata description of extremely sensitive protos. It didn’t contain user data by itself but those files are internal categories Google uses to classify user data.

For legal reasons we can’t show the result of this command x)

Why search for the string “Dogfood” specifically ? At Google, "dogfood" refers to the practice of using pre-release versions of the company's own products and prototypes internally to test and refine them before a public launch. It allows devs to test the deployment and potential issues in these products, before going to production.

Moreover, there was the following exposed file, privacy/data_governance/attributes/proto/classification.proto, which details how data is classified within Google. Although the file includes references to associated documentation, those documents remain highly confidential and should not be publicly accessible.

Note from Lupin again: This was found the day after our all-nighter, when we exfiltrated the binary file. We were in a hotel suite booked by Google, and we were working with the security team to understand what we had found the previous night. This time Justin was the one who slept on the couch hahaha ! This bug was really time consuming but so fun ! 😀

Exposing Internal Security Proto Definitions

The same output also reveals numerous internal proto files that should have remained hidden. Running:

cat stringsoutput.txt| grep '\.proto' | grep 'security'

lists several sensitive files, including:

    security/thinmint/proto/core/thinmint_core.proto
    security/thinmint/proto/thinmint.proto
    security/credentials/proto/authenticator.proto
    security/data_access/proto/standard_dat_scope.proto
    security/loas/l2/proto/credstype.proto
    security/credentials/proto/end_user_credentials.proto
    security/loas/l2/proto/usertype.proto
    security/credentials/proto/iam_request_attributes.proto
    security/util/proto/permission.proto
    security/loas/l2/proto/common.proto
    ops/security/sst/signalserver/proto/ss_data.proto
    security/credentials/proto/data_access_token_scope.proto
    security/loas/l2/proto/identity_types.proto
    security/credentials/proto/principal.proto
    security/loas/l2/proto/instance.proto
    security/credentials/proto/justification.proto

Searching the binary strings for security/credentials/proto/authenticator.proto confirms that its data is indeed exposed.
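
The same filtering can be done in Python when you want a deduplicated, sorted list instead of raw grep output. This is a minimal sketch; note that it is slightly stricter than the grep pipeline, since it extracts path-like tokens ending in .proto rather than whole matching lines. The regular expression and helper name are our own assumptions.

```python
import re

# Path-like token ending in ".proto" (word characters, dots, slashes, dashes).
PROTO_PATH = re.compile(r"[\w./-]+\.proto\b")


def security_protos(lines):
    """Return sorted unique .proto paths that mention 'security'."""
    found = set()
    for line in lines:
        for path in PROTO_PATH.findall(line):
            if "security" in path:
                found.add(path)
    return sorted(found)


# Example (commented out):
# with open("stringsoutput.txt", errors="replace") as f:
#     for path in security_protos(f):
#         print(path)
```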

Why were those protos there?

As we said previously, the Google Security Team thoroughly reviewed everything in the sandbox and gave a green light for public disclosure. However, the build pipeline for compiling the sandbox binary included an automated step that adds security proto files to a binary whenever it detects that the binary might need them to enforce internal rules.

In this particular case, that step wasn’t necessary, resulting in the unintended inclusion of highly confidential internal protos in the wild !

As bug bounty hunters, it's essential to deeply understand the business rules that govern a company’s operations. We reported these proto leaks because we know that Google treats them as highly confidential information that should never be exposed. The more we understand the inner workings and priorities of our target, the better we are at identifying and flagging those subtle bugs that might otherwise slip under the radar. This deep knowledge not only helps us pinpoint vulnerabilities but also ensures our reports are aligned with the critical security concerns of the organization.

Conclusion

Before we wrap things up, it’s worth mentioning how vital it is to test these cutting-edge A.I. systems before they go live. With so many interconnections and cool features, like even a simple sandbox that can access different extensions, there’s always the potential for unexpected surprises. We’ve seen firsthand that when all these parts work together, even a small oversight can open up new avenues for issues. So, thorough testing isn’t just a best practice; it’s the only way to make sure everything stays secure and functions as intended.

At the end of the day, what made this whole experience so memorable was the pure fun of the ride. Cracking vulnerabilities, exploring hidden code, and pushing the limits of Gemini's sandbox was as much about the challenge as it was about the excitement of the hunt. The people we’ve met at the bugSWAT event in Las Vegas were all awesome. The shared laughs over unexpected twists, and the thrill of outsmarting complex systems turned this technical journey into an adventure we’ll never forget. It’s moments like these, where serious hacking meets good times, that remind us why we do what we do.

Finally, a huge shout-out to all the other winners and participants who made bugSWAT 2024 such a blast. We want to congratulate Sreeram & Sivanesh for their killer teamwork, Alessandro for coming so close to that top spot, and En for making it onto the podium. It was an absolute thrill meeting so many amazing hackers and security pros; your energy and passion made this event unforgettable. We can’t wait to see everyone again at the next bugSWAT, and until then, keep hacking and having fun !

And of course, thanks to the Google Security team ! As always you rock ❤️